Building a Deep Learning Rig

slightly less GPU poor

February 12, 2025

While procrastinating on preparing for final exams last semester, I was scrolling on Craigslist and came across a $1.1k AI workstation deal. Here were the specs:

- Motherboard: Asus ROG STRIX Z490-G

- CPU: Intel Core i9-10850K (3.6GHz, 10 cores, 20 threads)

- CPU Cooler: Noctua NH-U12A-P

- RAM: 64GB (2 x Kingston DDR4-3200)

- GPU: PNY GeForce RTX 3090 XLR8 REVEL 24GB

- SSD: Samsung 970 EVO Plus 2TB M.2 SSD

- Case: Fractal Design Define Mini C

- Power Supply: Super Flower LEADEX Platinum 850W

- 3x Noctua 120mm NF-F12 PWM Fans

In late 2024 / early 2025, used RTX 3090s still sell for about $700 on average. Pricing the GPU at that figure leaves $400 for everything else, and I figured the remaining parts were certainly worth more than that. I had to cop.

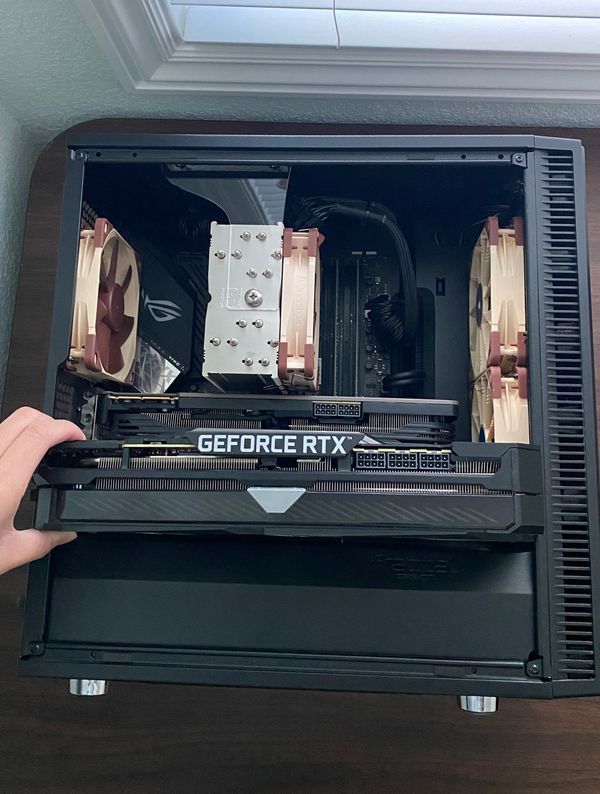

A few days after purchasing the workstation, I came across a used ASUS ROG Strix GeForce RTX 3090 for $500. The heatsink was slightly rusted since the owner had been using his gaming PC on his patio in a humid environment (lol ikr), but since it was functioning just fine I decided to also cop.

Although 3090s are now two generations old, they remain one of the few consumer cards (alongside the 4090) with 24GB of VRAM. My main goal is to have a dev machine for early prototyping of research ideas; when needed, I can scale up to large GPU clusters.

hacky solutions

Now that I had all the hardware, I realized I had a few issues.

1. An 850W PSU may not be sufficient for 2x RTX 3090s.

2. The case was not large enough to fit the new GPU, in either length or height, since it is an mATX case and the new GPU is longer.

3. The mATX motherboard has its front panel header and case fan pins along the bottom edge. The additional GPU in the second PCIe slot would not clear the cables.

GPU too fat

Regarding (1), a lot of people recommend upgrading to a 1000W+ PSU for dual RTX 3090 setups. Since I’m too lazy and don’t want to spend any more money on a new PSU, I decided to just power limit both GPUs, which should let me keep using the 850W PSU.
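As a rough sanity check on that reasoning, the arithmetic can be sketched in a few lines of shell. The 350W figure is NVIDIA's reference board power for the RTX 3090 and 125W is the i9-10850K's rated TDP; the 75W allowance for the rest of the system is purely an assumption, and 225W is the per-GPU limit set later in this post. Transient spikes can exceed these nominal numbers, so treat this as a back-of-the-envelope estimate rather than a guarantee.

```shell
#!/bin/bash
# Back-of-the-envelope power budget for a dual-GPU rig (all values in watts).
# Usage: estimate_draw <num_gpus> <watts_per_gpu> <cpu_watts> <other_watts>
estimate_draw() {
  local gpus=$1 per_gpu=$2 cpu=$3 other=$4
  echo $(( gpus * per_gpu + cpu + other ))
}

PSU_WATTS=850
CPU_WATTS=125    # i9-10850K rated TDP
OTHER_WATTS=75   # assumed allowance for fans, drives, RAM, motherboard

stock=$(estimate_draw 2 350 "$CPU_WATTS" "$OTHER_WATTS")     # GPUs at the 350W reference limit
limited=$(estimate_draw 2 225 "$CPU_WATTS" "$OTHER_WATTS")   # GPUs capped at 225W

echo "stock:   ${stock}W, headroom $(( PSU_WATTS - stock ))W"
echo "limited: ${limited}W, headroom $(( PSU_WATTS - limited ))W"
```

With these numbers the stock estimate comes to 900W, which is 50W over the PSU rating, while the power-limited estimate is 650W, leaving 200W of headroom.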

For (2), the only reasonable solution is to upgrade the case. I went for the LIAN LI LANCOOL 207 due to its airflow performance. There are 2 bottom fans for direct airflow to the GPUs, which is optimal in my case with 2 GPUs stacked directly on top of each other.

Lastly, (3) was trickier. I found a Reddit thread where people had tried 2x10 90-degree pin headers, but these weren’t useful since the GPU still couldn’t clear the pins. The solution that worked was to use breadboard jumper wires with a bit of the plastic housing trimmed off, which made them low profile enough for the GPU to clear.

I was extremely lucky that the GPU could seat properly even with the stock case fan cables inserted. The cables are touching the GPU heatsink but whatever, there’s nothing I can really do about that.

On a side note, I also upgraded to 128GB RAM by adding another 2x 32GB sticks. This should help quite a bit for memory intensive tasks like data processing and weight offloading.

setup

The next step is to set up the rig. I’m using Tailscale to enable remote SSH access, nvidia-smi to power limit the GPUs, and tinygrad’s open-gpu-kernel-modules for p2p support.

Tailscale

Tailscale makes remote SSH access really easy to set up. With Tailscale, you can add your devices to a private VPN, which allows convenient SSH access between them over this network. You can find more details on Tailscale setup from this blog.
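For reference, a minimal sketch of what that setup can look like on a Linux rig. It assumes an SSH server is already running on the rig, and `user` and `rig` are placeholders for your own username and machine name; connecting by name also assumes MagicDNS is enabled on the tailnet (otherwise use the address printed by `tailscale ip -4`).

```shell
# On the rig: install Tailscale using the official install script
curl -fsSL https://tailscale.com/install.sh | sh

# Join the tailnet; this prints a login URL to open in a browser
sudo tailscale up

# Show the rig's Tailscale IPv4 address and the other devices on the tailnet
tailscale ip -4
tailscale status

# From a laptop logged in to the same tailnet
# ("user" and "rig" are placeholders, not real names)
ssh user@rig
```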

power limiting GPUs

In a bash script nvidia-pl.sh, you can use these commands to power limit the GPUs.

#!/bin/bash

# enable persistence mode

sudo nvidia-smi -pm 1

# power limit to 225W

sudo nvidia-smi -pl 225

And to ensure these commands run after every reboot, edit root’s crontab by running sudo crontab -e and add this line to the file. (The script needs root, and cron cannot answer a sudo password prompt.)

@reboot sh /path/to/nvidia-pl.sh

p2p drivers [wip]

tinygrad maintains a fork of NVIDIA’s open-gpu-kernel-modules that enables peer-to-peer (P2P) support over PCIe. Ideally, you would want an NVLink bridge when you have multiple GPUs in a node, but since my GPUs are not the same size, the NVLink bridge will not fit. This mod should improve performance whenever we shard and partition across GPUs, since collectives such as all-reduce and all-gather can then copy data directly between the cards. I will benchmark this with NCCL in the future!
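Until that benchmark exists, here is a sketch of how P2P could be checked and measured. The `nvidia-smi topo` commands report how the GPUs are connected and whether peer-to-peer reads are supported between them. The second half uses NVIDIA's nccl-tests repository and assumes CUDA and NCCL are installed in their default locations; the message sizes are simply the ones I would start with, not tuned values. Running the same test with `NCCL_P2P_DISABLE=1` gives a baseline without P2P for comparison.

```shell
# Show how the GPUs are connected (e.g. through a PCIe host bridge)
nvidia-smi topo -m

# Report whether peer-to-peer reads are supported between each GPU pair
nvidia-smi topo -p2p r

# Build NVIDIA's NCCL benchmarks (assumes CUDA and NCCL in default locations)
git clone https://github.com/NVIDIA/nccl-tests.git
cd nccl-tests
make

# All-reduce across 2 GPUs, message sizes from 8 bytes to 256MB, doubling each step
./build/all_reduce_perf -b 8 -e 256M -f 2 -g 2

# Same test with P2P disabled, as a baseline
NCCL_P2P_DISABLE=1 ./build/all_reduce_perf -b 8 -e 256M -f 2 -g 2
```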

And here we have it, build is complete!

Edit: 2/15/2025

smart plug

Although I could leave the rig running 24/7, it would be better to have a way to turn the rig on and off as needed, mainly for my own peace of mind so that it doesn’t burn down my home when I’m away. I bought a smart plug which I can easily control using Apple HomeKit. In the BIOS, I configured the rig to turn on when “Restoring from AC power loss.”

Now, I can turn on the smart plug to boot up the rig. To power off, I run shutdown -h now, wait for the rig to finish shutting down, and then turn off the smart plug.
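Cutting power before the OS has finished halting risks an unclean shutdown, so one option is to wait until the rig stops answering pings before switching off the plug. The helper below is a hypothetical sketch, not part of my actual setup: `wait_until_down` and the hostname `rig` are made-up names. A host can stop answering pings slightly before it has fully powered off, so it is worth waiting a few extra seconds after it reports down.

```shell
#!/bin/bash
# Poll a host with ping until it stops answering, so the plug is only
# switched off after the OS has gone down.
# Usage: wait_until_down <host> [interval_seconds] [max_tries]
wait_until_down() {
  local host=$1 interval=${2:-5} max_tries=${3:-60}
  local i
  for (( i = 0; i < max_tries; i++ )); do
    # -c 1 sends a single probe; a non-zero exit status means no reply
    if ! ping -c 1 "$host" > /dev/null 2>&1; then
      echo "$host is down"
      return 0
    fi
    sleep "$interval"
  done
  echo "$host is still up after $max_tries tries" >&2
  return 1
}

# Example (hypothetical hostname "rig"):
#   ssh rig sudo shutdown -h now
#   wait_until_down rig && echo "safe to switch off the plug"
```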